|

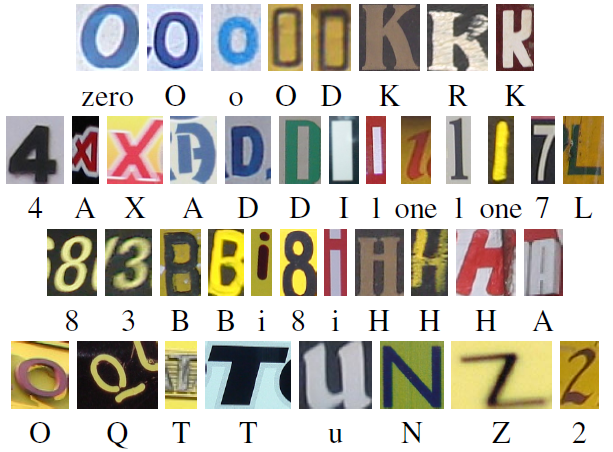

The Chars74K datasetCharacter Recognition in Natural Images |

[ jump to download ]

Note: this page branched off from the original page at the University of Surrey, which is in an old server behind robot blockers, hence I have copied it to here.

Character recognition is a classic pattern recognition problem for which researchers have worked since the early days of computer vision. With today's omnipresence of cameras, the applications of automatic character recognition are broader than ever. For Latin script, this is largely considered a solved problem in constrained situations, such as images of scanned documents containing common character fonts and uniform background. However, images obtained with popular cameras and hand held devices still pose a formidable challenge for character recognition. The challenging aspects of this problem are evident in this dataset.

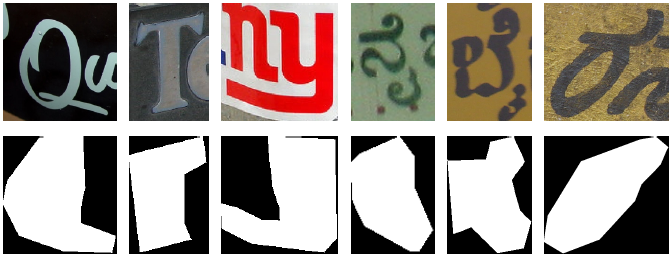

In this dataset, symbols used in both English and Kannada are available.

In the English language, Latin script (excluding accents) and Hindu-Arabic numerals are used. For simplicity we call this the "English" characters set. Our dataset consists of:

The compound symbols of Kannada were treated as individual classes, meaning that a combination of a consonant and a vowel leads to a third class in our dataset. Clearly this is not the ideal representation for this type of script, as it leads to a very large number of classes. However, we decided to use this representation for our baseline evaluations present in [deCampos et al] as a way to evaluate a generic recognition method for this problem.

The following paper gives further descriptions of this dataset and baseline evaluations using a bag-of-visual-words approach with several feature extraction methods and their combination using multiple kernel learning:

T. E. de Campos, B. R. Babu and M. Varma.

Character recognition in natural images.

In Proceedings of the International Conference on Computer

Vision Theory and Applications (VISAPP), Lisbon, Portugal, February 2009.

Bibtex |

Abstract |

PDF

Follow this link for a list publications that have cited the above paper and this link for papers that mention this dataset.

Disclaimer: by downloading and using the datasets below (or part of them) you agree to acknowledge their source and cite the above paper in related publications. We will be grateful if you contact us to let us know about the usage of the our datasets.

Maps/Kannada/Img/map.mat

contains a MatLab cell array of 990 elements. Each cell contains a Kannada character (in Unicode)

and its position in the array is the class number. So, given Kannada character (in a variable called input,

to find its class number is you need to do class_number = find(strcmp(input, map),1);

list_English_Img to load the lists to the memory.list.TRNind(:,end) (so please copy the result of this to a variable), i.e., it is defined by the last split of training data.

Note that sum(list.TRNind(:,end)>0)/list.NUMclasses results in 15, confirming that there are 15 training samples per class.list.TSTind(:,end) (Note that here the index end can be replaced by any valid number because the columns of list.TSTind are repetitions of the same list. This is because

we have fixed the test set for all the experiments with 15 samples per class.)list.ALLlabels(list.TRNind(:,end)) for the training set and list.ALLlabels(list.TSTind(:,end))

for the test set. Again, note that for each class label, there should be 15 samples, i.e., sum(list.ALLlabels(list.TSTind(:,end))==x) results in 15 for x=1:list.NUMclasseslist.ALLnames(list.TRNind(c,end),:) for training and list.ALLnames(list.TSTind(c,end),:) for testing, where c is the index that iterates through the samples (from 1 to 930 in the case of this experiment, but it can be bound by class labels, depending on how you implement your experiment). Note that list.ALLnames does not include the file extension (png) and the absolute path of the images, you need to append these to the string.list.VALind and list.TXNind)

(In addition to the ones shown following the links above)

This dataset and the experiments present in the paper were done at Microsoft Research India by T de Campos, with the mentoring support from M Varma. Additional SVM and MKL experiments were performed by BR Babu.

We would like to acknowledge the help of several volunteers who annotated this dataset. In particular, we would like to thank Arun, Kavya, Ranjeetha, Riaz and Yuvraj. We would also like to thank Richa Singh and Gopal Srinivasa for developing some of the tools for annotation (one of the tools used is described here). We are grateful to CV Jawahar for helpful discussions.

We thank the CVSSP/Surrey for hosting this web page. T de Campos also thanks Xerox RCE for the support while he finalized the paper.